What Is ETL (Extract, Transform, Load)?

ETL is a foundational process for managing data within modern organizations. Learn what ETL is, how it works, its benefits, and how it compares to related processes like ELT and Reverse ETL.

ETL Definition

ETL (Extract, Transform, Load) is a process that extracts raw data from various sources, transforms it into a usable format, and loads it into a target system such as a data warehouse.

- Extract: Data is collected from sources such as databases, applications, or flat files.

- Transform: The data is cleaned, reformatted, and validated to ensure quality.

- Load: The transformed data is stored in a central repository, ready for analysis and decision-making.
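The three steps can be illustrated end to end with a minimal Python sketch. It assumes a small, hypothetical CSV export as the source and an in-memory SQLite database as a stand-in for the target system; the function names `extract`, `transform`, and `load` are illustrative, not part of any particular tool.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a flat-file source.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,5.00
2,bob,5.00
3,,12.50
"""


def extract(text: str) -> list[dict]:
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: standardize names, drop incomplete rows and duplicates."""
    seen = set()
    clean = []
    for row in rows:
        customer = row["customer"].strip().title()
        if not customer:
            continue  # validation: skip rows missing a customer
        key = int(row["order_id"])
        if key in seen:
            continue  # de-duplication by order_id
        seen.add(key)
        clean.append((key, customer, float(row["amount"])))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders").fetchall())
# → [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

The duplicate order and the row with no customer never reach the target table, which is the point of transforming before loading.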

Why do organizations need ETL?

Organizations rely on ETL to create a single source of truth, ensuring that their data is clean, accessible, and actionable. Raw data is often unstructured, inconsistent, or incomplete, making it unusable for effective decision-making. ETL processes address these challenges by consolidating and refining data into a reliable, centralized format.

Improved Data Quality

ETL processes clean, validate, and enrich raw data to remove duplicates, fix inconsistencies, and standardize formats. This ensures businesses are analyzing trustworthy and accurate data.

Centralized Data Access

By consolidating data from different systems into a single location, ETL enables organizations to create a "single source of truth." This eliminates silos and allows departments to access consistent, up-to-date information.

Enhanced Decision-Making

Accurate, unified data empowers leaders to analyze trends, generate insights, and make informed decisions. ETL ensures businesses are working with the best available information to drive strategy.

Scalability and Automation

Modern ETL processes automate repetitive tasks and streamline data pipelines, so organizations can handle more data without a matching increase in manual effort. This becomes essential as data volumes grow.

To unlock the full value of ETL, organizations often adopt DataOps methodologies, which ensure that data workflows are automated, reliable, and aligned with business needs.

ETL Process

The ETL process is the foundation of effective data management. It involves moving data through three key stages to ensure it is clean, structured, and ready for analysis.

Extract

The first step is to collect data from various sources, such as:

- Databases (e.g., MySQL, Oracle)

- APIs and web services

- Enterprise applications (e.g., CRM, ERP systems)

- Flat files, spreadsheets, and external data feeds
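A minimal sketch of extracting from several source types into one uniform record shape follows. It is illustrative only: SQLite stands in for MySQL or Oracle, a JSON string stands in for an API response (no network call is made), and the table, field, and function names are hypothetical.

```python
import csv
import io
import json
import sqlite3


def extract_from_database(conn: sqlite3.Connection) -> list[dict]:
    """Pull rows from a relational source (SQLite stands in for MySQL/Oracle)."""
    conn.row_factory = sqlite3.Row
    rows = conn.execute("SELECT id, email FROM customers").fetchall()
    return [dict(row) | {"source": "database"} for row in rows]


def extract_from_api(payload: str) -> list[dict]:
    """Parse a JSON body, as an API or web service might return it."""
    return [{"id": item["id"], "email": item["email"], "source": "api"}
            for item in json.loads(payload)["customers"]]


def extract_from_flat_file(text: str) -> list[dict]:
    """Read a CSV export (e.g., a spreadsheet saved as CSV)."""
    return [{"id": int(r["id"]), "email": r["email"], "source": "file"}
            for r in csv.DictReader(io.StringIO(text))]


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
db.execute("INSERT INTO customers VALUES (1, 'a@example.com')")

records = (
    extract_from_database(db)
    + extract_from_api('{"customers": [{"id": 2, "email": "b@example.com"}]}')
    + extract_from_flat_file("id,email\n3,c@example.com\n")
)
print([r["source"] for r in records])  # ['database', 'api', 'file']
```

Tagging each record with its source keeps lineage visible when the combined data is transformed later.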

Transform

Once extracted, the data is cleaned, standardized, and enriched to make it consistent and useful for analysis. Common transformation tasks include:

- Removing duplicates and correcting inconsistencies

- Standardizing formats and structures, and aggregating records into summaries (for example, totals per region)

- Validating for accuracy and completeness

- Enriching datasets by merging with external or complementary data sources
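Each of these transformation tasks can be sketched in a few lines of Python. The records, the country alias table, and the country-to-region lookup below are hypothetical examples chosen to show one instance of each problem; real pipelines would use their own rules and reference data.

```python
from collections import defaultdict

# Hypothetical extracted records with typical quality problems.
raw = [
    {"order_id": 1, "country": "us", "amount": "10.50"},
    {"order_id": 1, "country": "us", "amount": "10.50"},  # duplicate
    {"order_id": 2, "country": "USA", "amount": "4.50"},  # inconsistent code
    {"order_id": 3, "country": "de", "amount": "-1"},     # fails validation
    {"order_id": 4, "country": "DE", "amount": "7.00"},
]

# Hypothetical lookup tables for standardization and enrichment.
COUNTRY_ALIASES = {"USA": "US"}
REGIONS = {"US": "North America", "DE": "Europe"}


def transform(records):
    seen, clean = set(), []
    for rec in records:
        # Remove duplicates by business key.
        if rec["order_id"] in seen:
            continue
        seen.add(rec["order_id"])
        # Correct inconsistencies: normalize case and known aliases.
        country = rec["country"].strip().upper()
        country = COUNTRY_ALIASES.get(country, country)
        amount = float(rec["amount"])
        # Validate: reject negative amounts and unknown countries.
        if amount < 0 or country not in REGIONS:
            continue
        # Enrich: add a region from a complementary reference source.
        clean.append({"order_id": rec["order_id"], "country": country,
                      "region": REGIONS[country], "amount": amount})
    return clean


def aggregate_by_region(records):
    # Aggregate: total amount per region.
    totals = defaultdict(float)
    for rec in records:
        totals[rec["region"]] += rec["amount"]
    return dict(totals)


cleaned = transform(raw)
print(aggregate_by_region(cleaned))  # {'North America': 15.0, 'Europe': 7.0}
```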

Load

Finally, the transformed data is loaded into a target system, such as:

- Data warehouses (e.g., Snowflake, Redshift) for business intelligence

- Databases for further analysis or operational use

- Data lakes for storage and future exploration
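One common design choice in the load step is making it safe to re-run, so a retried or corrected batch updates rows instead of duplicating them. The sketch below assumes an in-memory SQLite database as a stand-in for a warehouse and uses SQLite's `INSERT ... ON CONFLICT DO UPDATE` (available in SQLite 3.24 and later); warehouses such as Snowflake or Redshift offer their own `MERGE`-style statements with different syntax. The table name is hypothetical.

```python
import sqlite3


def load(conn: sqlite3.Connection, rows) -> None:
    """Load transformed rows; re-running updates rows instead of duplicating them."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS sales_by_region (
               region TEXT PRIMARY KEY,
               total  REAL NOT NULL)"""
    )
    conn.executemany(
        """INSERT INTO sales_by_region (region, total) VALUES (?, ?)
           ON CONFLICT(region) DO UPDATE SET total = excluded.total""",
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a warehouse connection
load(conn, [("Europe", 7.0), ("North America", 15.0)])
load(conn, [("Europe", 9.0)])  # a later run corrects one value
result = conn.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
print(result)  # [('Europe', 9.0), ('North America', 15.0)]
```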

The ETL process streamlines data workflows, making it easier for organizations to analyze and leverage data for strategic decisions. Without ETL, raw data would remain fragmented, inconsistent, and difficult to use, limiting the value organizations can derive from their information.

For organizations looking to streamline ETL processes and improve data delivery across teams, DataOps provides a framework for optimizing workflows and achieving end-to-end visibility.


What is an ETL example?

ETL is widely used across industries. Here’s a practical example of the ETL process:

- Extract: A company pulls customer transaction data from its e-commerce platform, a CRM system, and flat files.

- Transform: Duplicate records are removed, product categories are standardized, and customer regions are tagged.

- Load: The cleaned and formatted data is loaded into a cloud data warehouse such as Snowflake for business analysis.

By combining disparate data, businesses gain actionable insights into customer purchasing trends.
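The example can be sketched in Python under some simplifying assumptions: the three sources are small hypothetical in-memory lists, the CRM provides a customer-to-state mapping, the category and region mappings are invented for illustration, and SQLite stands in for the cloud warehouse.

```python
import sqlite3

# Hypothetical extracts from the three sources in the example.
ecommerce = [("T1", "c1", "electronics", 120.0), ("T2", "c2", "Home & Garden", 40.0)]
crm_customers = {"c1": "CA", "c2": "NY"}  # customer id -> state
flat_file = [("T2", "c2", "home and garden", 40.0), ("T3", "c1", "Electronics", 80.0)]

# Hypothetical mappings used to standardize categories and tag regions.
CATEGORY_MAP = {"electronics": "Electronics", "home & garden": "Home & Garden",
                "home and garden": "Home & Garden"}
STATE_TO_REGION = {"CA": "West", "NY": "Northeast"}

# Transform: de-duplicate on transaction id, standardize, tag region.
transactions = {}
for txn_id, cust, category, amount in ecommerce + flat_file:
    transactions.setdefault(txn_id, (
        txn_id,
        CATEGORY_MAP[category.lower()],
        STATE_TO_REGION[crm_customers[cust]],
        amount,
    ))

# Load into a table that stands in for the cloud warehouse.
wh = sqlite3.connect(":memory:")
wh.execute("CREATE TABLE sales (txn_id TEXT PRIMARY KEY, category TEXT, "
           "region TEXT, amount REAL)")
wh.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", transactions.values())

# Analyze purchasing trends: revenue by region and category.
trend = wh.execute(
    "SELECT region, category, SUM(amount) FROM sales "
    "GROUP BY region, category ORDER BY region, category"
).fetchall()
print(trend)  # [('Northeast', 'Home & Garden', 40.0), ('West', 'Electronics', 200.0)]
```

Transaction T2 appears in both the e-commerce extract and the flat file but is counted once, so the regional totals are not inflated.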

What is ETL in SQL?

ETL in SQL refers to the use of SQL (Structured Query Language) scripts to execute Extract, Transform, Load processes within relational databases. SQL is a powerful tool for managing data workflows due to its ability to efficiently query, transform, and organize structured datasets.

How SQL is used in ETL:

- Extract: Use SQL queries to pull data from multiple tables, databases, or systems.

- Transform: Apply operations such as joins, aggregations, filtering, and data cleansing to refine raw data into a usable format.

- Load: Insert or update the transformed data into reporting tables, data warehouses, or other target systems for analysis.
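A minimal sketch of ETL expressed in SQL follows, run through Python's built-in `sqlite3` module so it is self-contained. The `orders`, `customers`, and `revenue_by_country` tables are hypothetical; a single `INSERT INTO ... SELECT` statement extracts with a join, transforms with cleansing (`TRIM`, `UPPER`), filtering, and aggregation, and loads the result into a reporting table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical source tables.
    CREATE TABLE customers (id INTEGER PRIMARY KEY, country TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER,
                         status TEXT, amount REAL);
    INSERT INTO customers VALUES (1, ' us'), (2, 'DE'), (3, 'de ');
    INSERT INTO orders VALUES
        (10, 1, 'paid', 30.0), (11, 2, 'paid', 20.0),
        (12, 3, 'paid', 5.0),  (13, 1, 'cancelled', 99.0);

    -- Target reporting table.
    CREATE TABLE revenue_by_country (country TEXT PRIMARY KEY, revenue REAL);

    -- Extract (JOIN), transform (cleanse, filter, aggregate), and load.
    INSERT INTO revenue_by_country (country, revenue)
    SELECT UPPER(TRIM(c.country)), SUM(o.amount)
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.status = 'paid'
    GROUP BY UPPER(TRIM(c.country));
""")
report = conn.execute("SELECT * FROM revenue_by_country ORDER BY country").fetchall()
print(report)  # [('DE', 25.0), ('US', 30.0)]
```

Cleansing the country codes before grouping matters here: without `UPPER(TRIM(...))`, `'DE'` and `'de '` would be reported as separate countries.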

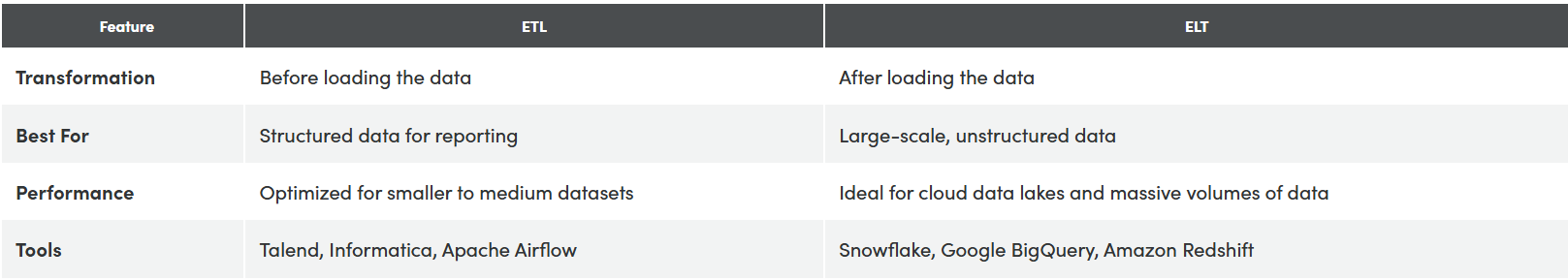

ETL vs ELT

While ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are similar processes, they differ in the order of operations and their ideal use cases:

- ETL: Data is transformed before being loaded into the target system. Best for structured data and traditional reporting.

- ELT: Data is loaded first and transformed after. Ideal for large, unstructured datasets and modern cloud platforms.

ETL is traditionally suited for on-premises systems and scenarios where data quality and structure are critical before analysis. ELT, on the other hand, excels in cloud-based environments where raw, unstructured data can be transformed flexibly at scale.
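The difference in ordering can be shown by running the same small job both ways. In this illustrative sketch, SQLite stands in for the target system and the raw rows are hypothetical: the ETL path cleans data in Python before loading, while the ELT path loads raw rows into a staging table and then transforms them with SQL inside the target.

```python
import sqlite3

RAW = [("a@example.com ", "10"), ("B@EXAMPLE.COM", "5"), ("a@example.com ", "10")]


def run_etl(conn: sqlite3.Connection) -> None:
    # ETL: transform in the pipeline first, then load only clean rows.
    clean = sorted({(email.strip().lower(), float(amount)) for email, amount in RAW})
    conn.execute("CREATE TABLE etl_orders (email TEXT, amount REAL)")
    conn.executemany("INSERT INTO etl_orders VALUES (?, ?)", clean)


def run_elt(conn: sqlite3.Connection) -> None:
    # ELT: load raw rows as-is into a staging table, then transform
    # inside the target using its own SQL engine.
    conn.execute("CREATE TABLE raw_orders (email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", RAW)
    conn.execute("""
        CREATE TABLE elt_orders AS
        SELECT DISTINCT LOWER(TRIM(email)) AS email,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
    """)


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl = conn.execute("SELECT * FROM etl_orders ORDER BY email").fetchall()
elt = conn.execute("SELECT * FROM elt_orders ORDER BY email").fetchall()
print(etl == elt, etl)  # True [('a@example.com', 10.0), ('b@example.com', 5.0)]
```

Both paths produce the same result; the ELT path also keeps the untouched `raw_orders` table in the target, which is what allows raw data to be re-transformed later in different ways.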

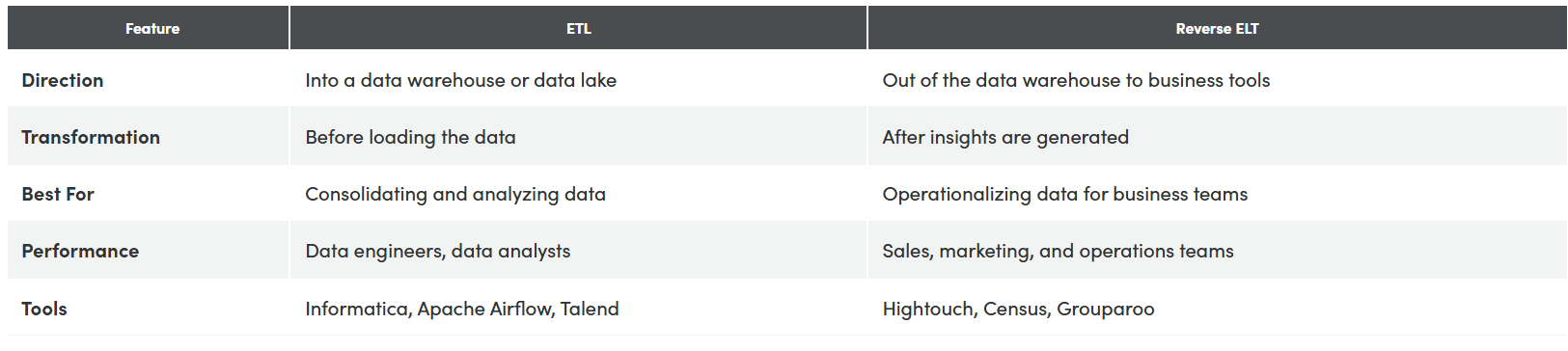

ETL vs Reverse ETL

While ETL and Reverse ETL both involve the movement of data, they work in opposite directions and serve distinct purposes within the data pipeline:

- ETL: Moves data from various sources into a centralized data warehouse or data lake for storage and analysis.

- Reverse ETL: Moves processed insights from the data warehouse back into operational systems, enabling business teams to act on the data.

ETL creates clean, structured data that fuels analytics and reporting. Reverse ETL transforms those insights into actionable outputs for frontline business systems, closing the loop between data analysis and operational execution. Together, ETL and Reverse ETL bridge the gap between data management and data activation, helping organizations make data-driven decisions and drive tangible outcomes.
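A minimal Reverse ETL sketch follows. It assumes SQLite as a stand-in warehouse holding a hypothetical `customer_metrics` table, and a hypothetical `FakeCrm` class in place of an operational system; a real sync would call the vendor's API at that point, which is not shown. The lifetime-value threshold and segment name are also illustrative.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class FakeCrm:
    """Hypothetical stand-in for an operational system such as a CRM.

    A real Reverse ETL job would call the vendor's API here instead.
    """
    contacts: dict = field(default_factory=dict)

    def update_contact(self, email: str, **attributes) -> None:
        self.contacts.setdefault(email, {}).update(attributes)


def reverse_etl(warehouse: sqlite3.Connection, crm: FakeCrm) -> int:
    """Read a modeled insight from the warehouse and push it to the CRM."""
    rows = warehouse.execute(
        "SELECT email, lifetime_value FROM customer_metrics "
        "WHERE lifetime_value >= ?",
        (1000,),  # hypothetical "high value" threshold
    ).fetchall()
    for email, ltv in rows:
        crm.update_contact(email, lifetime_value=ltv, segment="high_value")
    return len(rows)


warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE customer_metrics (email TEXT, lifetime_value REAL)")
warehouse.executemany("INSERT INTO customer_metrics VALUES (?, ?)",
                      [("a@example.com", 1500.0), ("b@example.com", 200.0)])
crm = FakeCrm()
synced = reverse_etl(warehouse, crm)
print(synced, crm.contacts)
# 1 {'a@example.com': {'lifetime_value': 1500.0, 'segment': 'high_value'}}
```

The data flows in the opposite direction from ETL: the warehouse is the source, and the operational system is the target that business teams work in.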

Both ETL and Reverse ETL benefit from a strong DataOps foundation, which helps automate, monitor, and enhance data movement throughout the pipeline to deliver actionable insights.

BMC products that use ETL

Control-M SaaS

Enables seamless ETL workflow orchestration by automating data extraction, transformation, and loading processes across complex IT environments.

BMC Helix Continuous Optimization

Analyzes capacity data to streamline data transformation and loading into optimized resource environments, ensuring efficiency at scale.

BMC TrueSight

Supports ETL extraction by collecting and monitoring diverse data inputs (logs, events, and metrics) across IT infrastructure for comprehensive analytics.