What Is Data Extraction? Definition, Tools & Methods

Fundamental to data management, data extraction consolidates data for subsequent analysis and informed decision-making.

Definition

What is data extraction?

Data extraction is the process of identifying, retrieving, and replicating raw data from various sources into a target repository. It is the first step in ETL and ELT processes, gathering data for deeper analysis and insights.

BMC Tools with Data Extraction Capabilities

Control-M

Comprehensive data pipeline orchestration is just one powerful capability that keeps your business running smoothly, giving you confidence at every step.

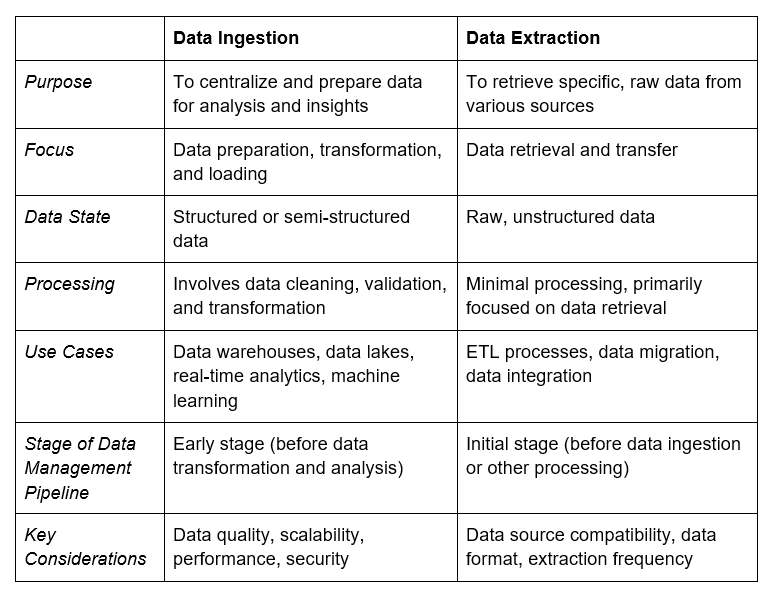

What is the difference between data ingestion and data extraction?

Data extraction involves retrieving specific, raw data from disparate sources (e.g., spreadsheets, sensors, transactional systems) ahead of processing and utilization.

Data ingestion centralizes and prepares datasets for use in different applications (e.g., reporting, real-time data consolidation), with the goal of creating actionable insights.

Data Extraction Methods

Full Data Extraction

Full data extraction retrieves an entire dataset from a source system. It is often required for the initial extraction from a particular source, but it can overload the network, especially if it is repeated frequently.
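
As a minimal sketch, assuming a relational source reachable through Python's built-in `sqlite3` module, a full extraction is an unfiltered read of the whole table. The `orders` table, its columns, and the `full_extract` helper are hypothetical stand-ins for a real source system.

```python
import sqlite3


def full_extract(conn: sqlite3.Connection, table: str) -> list:
    """Read every row of `table`: no filter, the whole dataset in one pass."""
    # The table name is interpolated into the SQL, so pass only trusted identifiers.
    return conn.execute(f"SELECT * FROM {table}").fetchall()


# An in-memory database stands in for the (hypothetical) source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
source.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("alice", 20.0), ("bob", 35.5), ("carol", 12.25)],
)

rows = full_extract(source, "orders")
print(len(rows))  # 3
```

Because every row is transferred on every run, the cost grows with the size of the table, which is why repeated full extractions can strain the network.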

Partial Data Extraction

Partial data extraction is more selective: it retrieves only a subset of the data. It's preferred when only part of the dataset is relevant to the project or desired outcomes, and it places less strain on the network than full data extraction.
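
A minimal sketch of a partial extraction, again assuming a hypothetical `orders` table in SQLite: only the needed columns are selected, and the selection criteria (here, orders at or above a minimum total) are pushed into the query. The criteria and the `partial_extract` name are illustrative assumptions.

```python
import sqlite3


def partial_extract(conn: sqlite3.Connection, min_total: float) -> list:
    """Extract only the columns and rows the project needs."""
    # Hypothetical selection criteria: customer and total, for orders >= min_total.
    return conn.execute(
        "SELECT customer, total FROM orders WHERE total >= ? ORDER BY id",
        (min_total,),
    ).fetchall()


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
source.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("alice", 20.0), ("bob", 35.5), ("carol", 12.25)],
)

print(partial_extract(source, 15.0))  # [('alice', 20.0), ('bob', 35.5)]
```

Filtering in the `WHERE` clause lets the source system discard irrelevant rows before they travel over the network.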

Incremental Data Extraction

Incremental data extraction identifies and transfers only the data that has been modified since the last extraction, making it the preferred choice for ongoing data synchronization.
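
One common way to implement incremental extraction is a "high-water mark": remember the latest modification timestamp seen, and on the next run fetch only rows modified after it. The sketch below assumes a hypothetical `orders` table whose `updated_at` column holds ISO-8601 timestamps that the source keeps up to date; it is an illustration, not the only approach.

```python
import sqlite3


def incremental_extract(conn: sqlite3.Connection, watermark: str):
    """Return rows modified after `watermark`, plus the watermark for the next run."""
    rows = conn.execute(
        "SELECT id, customer, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, updated_at TEXT)")
source.executemany(
    "INSERT INTO orders (customer, updated_at) VALUES (?, ?)",
    [("alice", "2024-01-01T10:00:00"), ("bob", "2024-01-02T09:30:00")],
)

# First run: "" sorts before any ISO-8601 timestamp, so every row is returned.
first, mark = incremental_extract(source, "")
# One record changes; the next run transfers only that record.
source.execute(
    "UPDATE orders SET updated_at = ? WHERE customer = ?",
    ("2024-01-03T08:00:00", "alice"),
)
second, mark = incremental_extract(source, mark)
print(second)  # [(1, 'alice', '2024-01-03T08:00:00')]
```

This approach depends on the source maintaining `updated_at` reliably. It does not detect deleted rows, and a row committed late with a timestamp that is not greater than the stored watermark would be skipped.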

Manual Data Extraction

Manual data extraction typically involves copying and pasting data from one source to another. It is no longer recommended for most businesses but can occasionally be used for smaller extractions.

Update Notification Data Extraction

Update notification data extraction (e.g., webhooks, change data capture) involves getting notified when data records have been changed. This can be useful in preparing data for real-time analysis.
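
Webhooks and log-based change data capture tools each work differently. As one simple, self-contained illustration, the sketch below uses SQLite triggers to record every insert, update, and delete in a hypothetical `change_log` table, which a consumer then reads from its last-seen position. Note that this is trigger-based change capture with polling, not a push notification mechanism; the table and function names are assumptions.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL);
-- Hypothetical change log: one entry per changed record, in commit order.
CREATE TABLE change_log (seq INTEGER PRIMARY KEY AUTOINCREMENT, op TEXT, row_id INTEGER);
CREATE TRIGGER orders_ins AFTER INSERT ON orders
BEGIN INSERT INTO change_log (op, row_id) VALUES ('insert', NEW.id); END;
CREATE TRIGGER orders_upd AFTER UPDATE ON orders
BEGIN INSERT INTO change_log (op, row_id) VALUES ('update', NEW.id); END;
CREATE TRIGGER orders_del AFTER DELETE ON orders
BEGIN INSERT INTO change_log (op, row_id) VALUES ('delete', OLD.id); END;
""")


def read_changes(conn: sqlite3.Connection, after_seq: int) -> list:
    """Return change records logged after sequence number `after_seq`."""
    return conn.execute(
        "SELECT seq, op, row_id FROM change_log WHERE seq > ? ORDER BY seq",
        (after_seq,),
    ).fetchall()


source.execute("INSERT INTO orders (customer, total) VALUES ('alice', 20.0)")
source.execute("UPDATE orders SET total = 25.0 WHERE id = 1")
source.execute("DELETE FROM orders WHERE id = 1")

changes = read_changes(source, 0)
print(changes)  # [(1, 'insert', 1), (2, 'update', 1), (3, 'delete', 1)]
```

Unlike a timestamp watermark, the change log also captures deletions, so a downstream consumer can mirror them.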

Physical Data Extraction

Physical data extraction is used to extract data from physical storage devices. It may involve data extraction from both online and offline sources (e.g., non-connected physical sensors).

Types of Big Data Extraction Tools

ETL Tools

Automated solutions that streamline the extraction, transformation, and loading of data, improving efficiency and data quality.

Batch Processing Tools

Efficient tools designed to extract large volumes of data in scheduled batches, optimizing resource utilization and minimizing system impact.

Open-Source Tools

Customizable and cost-effective tools that require technical expertise to implement and maintain, offering flexibility and community support.

Process

What is the data extraction process?

Step 1: Validate Data and Clean Data Regularly

When possible, implement practices to regularly clean and validate data upon capture. This helps to reduce errors and inconsistencies, which can complicate other data management processes later on.
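
A minimal sketch of cleaning and validating a record at capture time. The fields (`email`, `age`) and the rules (an `@` in the email, an age between 0 and 130) are hypothetical examples; real rules depend on your data.

```python
from dataclasses import dataclass


@dataclass
class CustomerRecord:
    email: str
    age: int


def validate_and_clean(raw: dict) -> CustomerRecord:
    """Normalize a raw record at capture time, or raise ValueError if it is unusable."""
    # Cleaning: trim whitespace and lowercase the email address.
    email = str(raw.get("email", "")).strip().lower()
    if "@" not in email:
        raise ValueError(f"invalid email: {raw.get('email')!r}")
    # Validation: age must parse as an integer within a plausible range.
    try:
        age = int(str(raw.get("age", "")).strip())
    except ValueError:
        raise ValueError(f"age is not an integer: {raw.get('age')!r}") from None
    if not 0 <= age <= 130:
        raise ValueError(f"age out of range: {age}")
    return CustomerRecord(email=email, age=age)


print(validate_and_clean({"email": "  Alice@Example.COM ", "age": " 34"}))
# CustomerRecord(email='alice@example.com', age=34)
```

Rejecting bad records at capture keeps the errors close to their origin, where they are easier to trace than after they have been copied into other systems.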

Step 2: Identify and Locate the Data to Extract

Based on the desired data analysis outputs, determine what types of data are needed for the extraction. Specific types of data may include customer data, financial data, or performance data.

You will also need to locate where this data resides, whether in a single source or across multiple sources, such as spreadsheets, sensors, images, and webpages.
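
When the same kind of data lives in several places, each source typically needs its own reader that produces a common record shape. The sketch below assumes two hypothetical sources, a spreadsheet exported as CSV and a SQLite `orders` table, and tags each record with where it came from.

```python
import csv
import io
import sqlite3


def extract_from_spreadsheet(csv_text: str) -> list:
    """Read rows from a spreadsheet exported as CSV (a header line is required)."""
    return [
        {"source": "spreadsheet", "customer": row["customer"], "total": float(row["total"])}
        for row in csv.DictReader(io.StringIO(csv_text))
    ]


def extract_from_database(conn: sqlite3.Connection) -> list:
    """Read the same fields from a transactional database table."""
    return [
        {"source": "database", "customer": customer, "total": total}
        for customer, total in conn.execute("SELECT customer, total FROM orders ORDER BY id")
    ]


spreadsheet = "customer,total\nalice,20.0\nbob,35.5\n"
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
db.execute("INSERT INTO orders (customer, total) VALUES ('carol', 12.25)")

records = extract_from_spreadsheet(spreadsheet) + extract_from_database(db)
print(len(records))  # 3
```

Keeping a `source` field on every record makes it easier to trace a value back to where it was found.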

Step 3: Identify Data Changes

If you are conducting incremental data extractions, you may need to identify what changes were made in the data. This may include detecting what data points or datasets have been modified, added, or deleted.

To simplify this process, you can set up notifications and alerts.
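
If the source offers neither timestamps nor notifications, one option (an illustrative assumption, not a prescribed method) is to compare two snapshots keyed by primary key and classify each key as added, deleted, or modified. The keys and record values below are hypothetical.

```python
def diff_snapshots(previous: dict, current: dict) -> dict:
    """Classify changes between two {primary_key: record} snapshots."""
    prev_keys, curr_keys = previous.keys(), current.keys()
    return {
        "added": sorted(curr_keys - prev_keys),
        "deleted": sorted(prev_keys - curr_keys),
        # Keys present in both snapshots whose record contents differ.
        "modified": sorted(k for k in curr_keys & prev_keys if previous[k] != current[k]),
    }


before = {1: ("alice", 20.0), 2: ("bob", 35.5)}
after = {1: ("alice", 25.0), 3: ("carol", 12.25)}
print(diff_snapshots(before, after))
# {'added': [3], 'deleted': [2], 'modified': [1]}
```

Comparing full snapshots requires reading the whole dataset each time, so for large sources a timestamp column or change notifications is usually cheaper.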

Step 4: Determine Where to Store the Data

The next step of the data extraction process is to choose a destination for the extracted data. This is a crucial component since the source system, data extraction software, and target system will need to be connected for the process to work.

In most cases, your destination will be a data warehouse or a system used for business intelligence reporting.

Step 5: Initiate the Data Extraction Process

In the case of initial data extractions, a full extraction may be necessary. However, in subsequent data extractions, you may only need to do a partial or incremental data extraction.

Regardless of which type of data extraction you use, the data is retrieved from the source and eventually transferred to its final destination.
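
The choice between a full and an incremental run can be automated. As a sketch under stated assumptions (a hypothetical `orders` table with an `updated_at` column, and a `state` dict standing in for whatever your tooling persists between runs), the first run extracts everything, later runs extract only changed rows, and both upsert into the destination by primary key.

```python
import sqlite3

SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, updated_at TEXT)"


def run_extraction(source, target, state: dict) -> int:
    """Full extraction on the first run, incremental on later runs; returns rows moved.

    `state` is a hypothetical dict that would be persisted between runs.
    """
    watermark = state.get("watermark")
    query = "SELECT id, customer, updated_at FROM orders"
    if watermark is None:
        rows = source.execute(query).fetchall()  # initial run: full extraction
    else:
        rows = source.execute(query + " WHERE updated_at > ?", (watermark,)).fetchall()
    # Upsert by primary key so re-extracted rows replace their older copies.
    target.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    target.commit()
    if rows:
        state["watermark"] = max(row[2] for row in rows)
    return len(rows)


source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
source.execute(SCHEMA)
target.execute(SCHEMA)
source.executemany(
    "INSERT INTO orders (customer, updated_at) VALUES (?, ?)",
    [("alice", "2024-01-01T10:00:00"), ("bob", "2024-01-02T09:30:00")],
)
state = {}
print(run_extraction(source, target, state))  # 2 (first run, full extraction)
source.execute("UPDATE orders SET updated_at = '2024-01-03T08:00:00' WHERE id = 1")
print(run_extraction(source, target, state))  # 1 (later run, incremental)
```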

Step 6: Continue with a Comprehensive Data Management Plan

While data extraction can stand on its own in some cases, extraction without any further processing does not provide actionable insights.

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes are often the next step. These processes will ensure the raw data is converted into something that can be used for analysis and business intelligence insights.
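
The difference between ETL and ELT is where the transformation happens. In the sketch below, which uses hypothetical tables and a deliberately simple transformation (trim spaces, lowercase names, convert cents to currency units), ETL transforms the rows in the pipeline before loading, while ELT loads the raw rows first and transforms them with SQL inside the target. Both end with the same `orders` table.

```python
import sqlite3


def extract(source):
    return source.execute("SELECT customer, amount_cents FROM raw_orders ORDER BY id").fetchall()


def etl(source, target):
    """ETL: transform in the pipeline, then load only the transformed rows."""
    transformed = [(c.strip(" ").lower(), cents / 100) for c, cents in extract(source)]
    target.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    target.executemany("INSERT INTO orders VALUES (?, ?)", transformed)


def elt(source, target):
    """ELT: load the raw rows first, then transform inside the target with SQL."""
    target.execute("CREATE TABLE raw_orders (customer TEXT, amount_cents INTEGER)")
    target.executemany("INSERT INTO raw_orders VALUES (?, ?)", extract(source))
    target.execute(
        "CREATE TABLE orders AS SELECT lower(trim(customer)) AS customer, "
        "amount_cents / 100.0 AS amount FROM raw_orders"
    )


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE raw_orders (id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)")
source.executemany(
    "INSERT INTO raw_orders (customer, amount_cents) VALUES (?, ?)",
    [(" Alice ", 2000), ("BOB", 3550)],
)

etl_target, elt_target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
etl(source, etl_target)
elt(source, elt_target)
query = "SELECT customer, amount FROM orders ORDER BY customer"
print(etl_target.execute(query).fetchall())  # [('alice', 20.0), ('bob', 35.5)]
```

ELT keeps the raw copy (`raw_orders`) in the target, so transformations can be rerun or changed later without extracting the data again.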

Step 7: Document, Test, and Audit Regularly

To keep the data extraction process efficient, accurate, and of the highest possible quality, regularly evaluate the data extraction pipeline, including maintaining detailed logs of how data is updated, changed, and extracted.
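
A minimal sketch of the logging side of an audit trail, using Python's standard `logging` module. The `logged_run` wrapper and the fields of each audit record (step name, status, row count, duration) are hypothetical choices; in practice the records would be written somewhere durable rather than kept in a list.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("extraction")


def logged_run(name, extract_fn, audit_log: list):
    """Run one extraction step, log the outcome, and append an audit record."""
    started = time.monotonic()
    try:
        rows = extract_fn()
    except Exception:
        # Record the failure (with traceback) before re-raising it to the caller.
        log.exception("extraction %s failed", name)
        audit_log.append({"step": name, "status": "failed", "rows": 0,
                          "seconds": time.monotonic() - started})
        raise
    elapsed = time.monotonic() - started
    log.info("extraction %s returned %d rows in %.3fs", name, len(rows), elapsed)
    audit_log.append({"step": name, "status": "ok", "rows": len(rows), "seconds": elapsed})
    return rows


audit = []
logged_run("orders", lambda: [("alice", 20.0), ("bob", 35.5)], audit)
print(audit[0]["status"], audit[0]["rows"])  # ok 2
```

Recording row counts per run makes it easy to spot anomalies, such as an incremental run that suddenly returns far more or fewer rows than usual.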

By conquering raw data chaos and extracting actionable intelligence, enterprises gain a competitive edge, scale with efficiency, and maintain a stronghold on their market.

Resources

Topics Related to Data Extraction

Data Extraction and ETL

Discover how data extraction, the first step in the ETL process, unlocks the power of your data and sets the stage for informed decision-making.

A Big Introduction to Big Data

Understand the 3V’s of big data, its core concepts, and the latest big data trends in business so you stay in-the-know.

Frequently Asked Questions

What is an example of data extraction?

Data extraction is used in a variety of data management use cases and initiatives. Here is an example use case to consider:

A large ecommerce company wants to optimize its customer retention strategy. They extract customer data from their sales database, including purchase history, demographics, and customer service interactions.

Although not part of the data extraction process itself, this extracted data is then cleaned, standardized, and integrated with other data sources, such as website analytics and social media interactions.

By analyzing this comprehensive dataset, the company can identify trends, segment customers, and implement targeted marketing campaigns to increase customer loyalty and drive sales.

What are two types of data extraction?

Two common data extraction techniques fall under the umbrella of “logical extraction.”

- Full Extraction: This method involves extracting all data from a source system at once. It is ideal for initial data extraction or when complete data refreshes are necessary. However, it can be time-consuming, especially for large datasets, and is not recommended as a long-term data extraction strategy.

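- Partial Extraction: This approach extracts pre-selected data based on a project or need. It's more efficient and reduces the load on source systems, making it suitable for subsequent or project-based data synchronization. Note that partial extraction is not the same as incremental extraction: partial extraction selects a subset of the data based on project criteria, while incremental extraction retrieves only the data that has changed since the last extraction.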

What is meant by “extracted data”?

Extracted data refers to the raw, unprocessed data that has been retrieved from various sources and isolated for further processing.

Upon extraction, the data is often unstructured or semi-structured, requiring subsequent transformation and cleaning before it can be used for analysis or reporting.

The purpose of extracted data is to consolidate information from disparate systems to serve the end-goal of gaining valuable insights.

Can data be extracted outside of the ETL or ELT processes?

Yes, data can be extracted outside of the ETL or ELT processes. However, extracted data by itself – without the subsequent transformation and loading steps – is often raw and unstructured. This greatly limits its usefulness for analysis and decision-making.

In some cases, data is extracted (without transformation or loading) for archival or compliance purposes. However, its full potential is realized when it's integrated into the broader data pipeline.

What is data extraction versus data mining?

While both terms may seem interchangeable, these are two different processes with different outputs and purposes.

Data extraction focuses on retrieving raw data from disparate sources – without inherently offering any sort of analysis, intelligence, or insights. In other words, the end product of data extraction is raw data.

Data mining, on the other hand, goes one step beyond data extraction. Using extracted data, data mining enables a certain degree of analysis, trend reporting, and insights. The end product of data mining is actionable intelligence.
